Your robots.txt file is one of the most powerful yet dangerous tools in your SEO toolkit.

It instructs search engine crawlers about which parts of your site they can and cannot access.

Get it right, and you’ll efficiently guide search engines to your best content while protecting sensitive areas.

Get it wrong, and you could accidentally block your entire website from Google, a mistake that has cost businesses millions in lost organic traffic.

Contradictory rules in robots.txt files lead to unpredictable results and can damage your SEO, while blocking too many pages can harm the indexing of your site.

In this guide, we’ll walk through the 21 most critical robots.txt issues that could be sabotaging your search rankings, plus proven strategies to fix them.

Key Takeaways

- Never use a bare Disallow: / under User-agent: * on a live site, as it blocks all search engine crawlers from your entire site.

- Syntax errors in robots.txt can prevent search engines from understanding your directives.

- Blocking CSS and JavaScript files hurts your site’s rendering and SEO performance.

- Contradictory rules create confusion and unpredictable crawling behavior.

- Missing or incorrect robots.txt files can waste your crawl budget.

- Regular testing and monitoring prevent costly robots.txt mistakes.

- Proper implementation can improve crawl efficiency.

- Common mistakes include blocking important pages and using the wrong syntax.

Understanding Robots.txt Fundamentals

Before diving into specific robots.txt issues to avoid, let’s establish why this small text file wields such enormous power over your SEO success.

Your robots.txt file acts as the first point of contact between search engine crawlers and your website.

Located at your domain’s root (yoursite.com/robots.txt), it tells crawlers which parts of your site they can access and which areas are off-limits.

It follows the Robots Exclusion Protocol (REP), a standard that tells web robots (like Googlebot) which URLs they are allowed to crawl.

Think of it as a guide for managing your crawl budget.

Every site has a limited amount of time and resources that Google will dedicate to crawling it.

A well-configured robots.txt file helps you optimize this budget by telling crawlers:

- “Hey, don’t waste your time on these low-value pages (like internal search results or login pages).”

- “Instead, focus your energy on my awesome blog posts and product pages over here!”

Crucially, it is not a mechanism for security.

Blocking a directory in robots.txt does not prevent a user from navigating to it, nor does it guarantee a malicious bot will obey the rules.

More importantly, it doesn’t prevent Google from indexing a page if other pages on the web link to it.

Why Are Robots.txt Issues So Damaging?

It’s very easy to make mistakes, such as blocking an entire site after a new design or CMS is rolled out, or not blocking sections of a site that should be private.

These seemingly small errors can have devastating consequences:

- Traffic Impact: A single misplaced directive can block your entire website from Google, resulting in immediate traffic drops.

- Revenue Loss: E-commerce sites have lost millions in revenue after accidentally blocking product pages or categories.

- Recovery Time: Fixing robots.txt issues doesn’t guarantee immediate recovery – it can take weeks or months for Google to recrawl and re-index your content.

- Indexing Issues: Pages blocked by robots.txt cannot be crawled, so Google cannot read their content or any noindex tag on them. Blocked URLs that are linked from elsewhere can still be indexed without a description, and Search Console reports them as “Indexed, though blocked by robots.txt”.

How to Find Your Robots.txt File

Before you can fix any robots.txt mistakes, you need to know how to find your robots.txt file. It’s simple.

- Direct URL: Just type your root domain into your browser and add /robots.txt at the end. (Example: https://www.yourwebsite.com/robots.txt).

- Google Search Console (GSC): If you have GSC set up (and you absolutely should), you can easily find and test your file. Go to Settings > Crawling > Crawl stats, then click the “View robots.txt” link in the “Hosts” section. This will show you the exact version of the file that Google last crawled.

If you see a 404 error, it means you don’t have one. If you see a file, it’s time to check it for errors.

Now, here are the 21 critical robots.txt issues and how to fix them.

Critical Syntax and Formatting Issues

1. Incorrect File Encoding and Format

One of the most fundamental robots.txt mistakes involves file encoding.

Your robots.txt file must be saved as plain text with UTF-8 encoding.

Using rich text formats, adding hidden characters, or saving with incorrect encoding creates parsing errors.

Common Mistake:

# This file was created with Microsoft Word and contains hidden formatting

User-agent: *

Disallow: /admin/

Correct Format:

# Plain text file, UTF-8 encoding, no hidden characters

User-agent: *

Disallow: /admin/
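
One way to catch these problems before uploading is to scan the raw bytes of the file. The sketch below is an illustration rather than a complete validator: check_robots_bytes and the SUSPICIOUS table are hypothetical names, and the character list covers only a few typical word-processor leftovers.

```python
# Characters that word processors commonly leave behind (illustrative, not exhaustive).
SUSPICIOUS = {
    "\u00a0": "non-breaking space",
    "\u200b": "zero-width space",
    "\u201c": "curly quote",
    "\u201d": "curly quote",
}


def check_robots_bytes(raw: bytes) -> list[str]:
    """Return a list of problems found in the raw bytes of a robots.txt file."""
    try:
        text = raw.decode("utf-8")
    except UnicodeDecodeError:
        return ["file is not valid UTF-8"]

    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        for char, name in SUSPICIOUS.items():
            if char in line:
                problems.append(f"line {number}: {name}")
    return problems


clean = b"User-agent: *\nDisallow: /admin/\n"
pasted = "User-agent: *\nDisallow:\u00a0/admin/\n".encode("utf-8")
print(check_robots_bytes(clean))
print(check_robots_bytes(pasted))
```

An empty list means the file decoded cleanly and none of the listed characters were found; it does not prove the rules themselves are correct.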

2. Case Sensitivity Errors

Paths in robots.txt rules are case-sensitive (directive names such as Disallow are not), but many webmasters forget this crucial detail.

A rule written as /Blog/ does not match /blog/, which leads to ineffective blocking or allowing of content.

Problematic Example:

User-agent: *

Disallow: /Blog/

Disallow: /ADMIN/

Better Approach:

User-agent: *

Disallow: /blog/

Disallow: /Blog/

Disallow: /admin/

Disallow: /ADMIN/
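
You can see the case-sensitivity effect with Python's standard-library parser. This is a minimal sketch, assuming a site at example.com whose real blog lives at /blog/ while the rule was typed as /Blog/.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Disallow: /Blog/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# The rule only matches the exact casing that was written in the file.
lower_allowed = parser.can_fetch("*", "https://example.com/blog/post")
upper_allowed = parser.can_fetch("*", "https://example.com/Blog/post")
print(lower_allowed, upper_allowed)
```

The lowercase URL stays crawlable because nothing in the file matches it, which is exactly how a mistyped rule ends up blocking nothing.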

3. Wildcard Misuse

Incorrect wildcard usage is among the most common robots.txt issues.

The asterisk (*) and dollar sign ($) have specific meanings that many users misunderstand.

Wrong Wildcard Usage:

User-agent: *

Disallow: /*.pdf*

Correct Implementation:

User-agent: *

Disallow: /*.pdf$

The dollar sign ensures the directive only applies to URLs ending with .pdf, not those containing .pdf anywhere in the path.
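
To make the difference concrete, here is a rough sketch of how the two wildcards are commonly interpreted: * matches any run of characters and a trailing $ anchors the end of the URL. The function name rule_matches is hypothetical, and real crawlers may differ in details such as percent-encoding.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Check a robots.txt path pattern against a URL path (plus query string)."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything, then turn each escaped "*" back into "match anything".
    regex = re.escape(pattern).replace(r"\*", ".*")
    if anchored:
        regex += r"\Z"
    # re.match anchors at the start, so a pattern without "$" is a prefix match.
    return re.match(regex, path) is not None


print(rule_matches("/*.pdf$", "/files/guide.pdf"))
print(rule_matches("/*.pdf$", "/files/guide.pdf.html"))
print(rule_matches("/*.pdf*", "/files/guide.pdf.html"))
```

Note that Python's built-in urllib.robotparser does not interpret these wildcards, so a helper along these lines is needed if you want to test wildcard rules locally.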

4. Missing Protocol Specifications

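A robots.txt file applies only to the protocol and host it is served from, so http://example.com and https://example.com are each governed by their own file. Failing to account for both the HTTP and HTTPS versions of your site creates gaps in your robots.txt coverage.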

Incomplete Coverage:

Sitemap: http://example.com/sitemap.xml

Complete Coverage:

Sitemap: https://example.com/sitemap.xml

Sitemap: http://example.com/sitemap.xml

Content Blocking Mistakes

5. Accidentally Blocking Important Pages

The most catastrophic robots.txt mistake is unintentionally blocking pages you want indexed.

This often happens during site migrations or when implementing broad blocking rules.

Dangerous Broad Blocking:

User-agent: *

Disallow: /wp-

This rule blocks every path that begins with /wp-, including /wp-content/, where your themes, plugins, and uploaded media live.

Safer Specific Blocking:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/
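
The sketch below compares the two rule sets against a handful of paths. The paths and the helper name blocked_paths are made up for illustration, and the check uses Python's standard-library parser, which does simple prefix matching.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt: str, paths: list[str]) -> list[str]:
    """Return the paths that the given robots.txt blocks for all crawlers."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch("*", "https://example.com" + p)]


# Hypothetical paths on a WordPress site.
paths = [
    "/wp-admin/",
    "/wp-content/uploads/logo.png",
    "/wp-content/themes/site/style.css",
    "/blog/post/",
]

broad = blocked_paths("User-agent: *\nDisallow: /wp-", paths)
specific = blocked_paths(
    "User-agent: *\nDisallow: /wp-admin/\nDisallow: /wp-includes/", paths
)
print(broad)
print(specific)
```

The broad rule catches the uploaded image and the theme stylesheet along with the admin area, while the specific rules leave them crawlable.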

6. Blocking CSS and JavaScript Resources

It might seem logical to block crawler access to JavaScript and CSS files, but this practice severely hurts your SEO.

Google needs to render your pages properly to understand their content and user experience.

SEO-Damaging Mistake:

User-agent: *

Disallow: /css/

Disallow: /js/

Disallow: *.css

Disallow: *.js

SEO-Friendly Approach:

User-agent: *

# Allow CSS and JS for proper rendering

Allow: /css/

Allow: /js/

Disallow: /private/

7. Contradictory Rules

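One common mistake in creating a robots.txt file is adding rules that contradict each other.

These conflicting directives create uncertainty about your intentions: Google applies the most specific (longest) matching rule and, when rules tie, the least restrictive one, but other crawlers may simply use whichever rule comes first.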

Contradictory Rules Example:

User-agent: *

Disallow: /blog/

Allow: /blog/

Clear, Consistent Rules:

User-agent: *

Allow: /blog/

Disallow: /blog/private/
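
The precedence described in the Robots Exclusion Protocol (longest matching rule wins, and Allow wins a tie) can be sketched in a few lines. This is a simplified illustration that handles plain path prefixes only, with no wildcards; is_allowed is a hypothetical helper, not part of any library.

```python
def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Apply longest-match precedence; an Allow rule wins when lengths tie."""
    best_length = -1
    allowed = True  # No matching rule means the path may be crawled.
    for directive, prefix in rules:
        if not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_length or (len(prefix) == best_length and is_allow):
            best_length = len(prefix)
            allowed = is_allow
    return allowed


contradictory = [("Disallow", "/blog/"), ("Allow", "/blog/")]
consistent = [("Allow", "/blog/"), ("Disallow", "/blog/private/")]

print(is_allowed(contradictory, "/blog/post"))
print(is_allowed(consistent, "/blog/post"))
print(is_allowed(consistent, "/blog/private/notes"))
```

A parser that uses the first matching rule instead (Python's urllib.robotparser is one example) would block /blog/post under the contradictory rules, which is why identical files can behave differently from crawler to crawler.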

8. Over-Restrictive Crawl Delay

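Setting excessive crawl delays can severely limit how often search engines visit your site, potentially reducing your indexing frequency. Note that Googlebot ignores the Crawl-delay directive, so this setting only affects crawlers that honor it, such as Bingbot.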

Too Restrictive:

User-agent: *

Crawl-delay: 86400

# This means crawlers wait 24 hours between requests

Balanced Approach:

User-agent: *

Crawl-delay: 1

# Reasonable 1-second delay between requests
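
A quick audit can flag an extreme value before it goes live. The following is a minimal sketch using Python's standard-library parser; the 10-second threshold and the name crawl_delay_warning are assumptions chosen for illustration, not recommended limits.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser

MAX_REASONABLE_DELAY = 10  # Seconds; a hypothetical threshold for this sketch.


def crawl_delay_warning(robots_txt: str, agent: str = "*") -> Optional[str]:
    """Return a warning if the Crawl-delay for the agent exceeds the threshold."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(agent)
    if delay is not None and delay > MAX_REASONABLE_DELAY:
        per_day = 86400 // delay  # One request every `delay` seconds.
        return f"Crawl-delay {delay}s allows at most {per_day} requests per day"
    return None


print(crawl_delay_warning("User-agent: *\nCrawl-delay: 86400"))
print(crawl_delay_warning("User-agent: *\nCrawl-delay: 1"))
```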

Technical Implementation Errors

9. Multiple Robots.txt Files

Each host can have only one robots.txt file, and it must sit at the root.

Crawlers request only /robots.txt, so copies saved in subdirectories are never read.

Any rules you place in those extra files are silently ignored, which means they never take effect.

Problematic Setup:

/robots.txt

/blog/robots.txt

/shop/robots.txt

Correct Setup:

/robots.txt (single file at root level)
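
To see which file actually governs a given page, strip the URL down to its scheme and host and append /robots.txt. The helper name robots_url_for is hypothetical; the sketch also shows that a subdomain resolves to its own separate file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url_for(page_url: str) -> str:
    """Return the robots.txt URL that applies to the given page URL."""
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port); drop path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url_for("https://example.com/blog/post?x=1"))
print(robots_url_for("https://shop.example.com/cart"))
```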

10. Incorrect HTTP Status Codes

If your server returns a server error (an HTTP status code in the 500s) for robots.txt, search engines won’t know which pages should be crawled.

This can cause crawlers to stop visiting your entire site.

Status Code Issues to Avoid:

500 Internal Server Error

502 Bad Gateway

503 Service Unavailable

Proper Implementation:

200 OK for existing robots.txt

404 Not Found for missing robots.txt (acceptable)
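
The status-code handling described in the Robots Exclusion Protocol standard can be summarized roughly as follows. This is a simplified sketch: crawl_policy is a hypothetical helper, and individual search engines may treat some codes differently or cache an earlier copy of the file.

```python
def crawl_policy(status: int) -> str:
    """Summarize how a compliant crawler reacts to a robots.txt response code."""
    if 200 <= status < 300:
        return "parse the file and apply its rules"
    if 300 <= status < 400:
        return "follow the redirect"
    if 400 <= status < 500:
        return "no rules available: crawl without restrictions"
    if 500 <= status < 600:
        return "file unreachable: assume everything is disallowed"
    return "unexpected status"


for code in (200, 404, 503):
    print(code, crawl_policy(code))
```

This is why a 404 is acceptable while a 5xx response is dangerous: the first removes restrictions, the second can pause crawling of the whole site.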

11. Malformed Sitemap URLs

Including incorrect or inaccessible sitemap URLs in your robots.txt file wastes crawl budget and creates indexing inefficiencies.

Problematic Sitemap References:

Sitemap: /sitemap.xml

Sitemap: http://old-domain.com/sitemap.xml

Sitemap: https://example.com/broken-sitemap.xml

Proper Sitemap Implementation:

Sitemap: https://example.com/sitemap.xml

Sitemap: https://example.com/sitemap-images.xml

Sitemap: https://example.com/sitemap-news.xml
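
A simple check can catch relative sitemap references like the first problematic example. The sketch below only verifies that each Sitemap value is an absolute http or https URL; it does not fetch anything, so it cannot detect a broken file or an outdated domain. The function name invalid_sitemap_lines is hypothetical.

```python
from urllib.parse import urlsplit


def invalid_sitemap_lines(robots_txt: str) -> list[str]:
    """Return Sitemap values that are not absolute http(s) URLs."""
    bad = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        if field.strip().lower() != "sitemap":
            continue
        url = value.strip()
        parts = urlsplit(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            bad.append(url)
    return bad


sample = """\
User-agent: *
Disallow: /admin/
Sitemap: /sitemap.xml
Sitemap: https://example.com/sitemap.xml
"""
print(invalid_sitemap_lines(sample))
```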

Crawl Budget and Performance Issues

12. Not Managing Infinite Loops

Failing to block URL parameters that create infinite crawling loops wastes precious crawl budget and can overwhelm your server.

Infinite Loop Creators:

Pagination: /page/1/page/2/page/3/…

Session IDs: ?sessionid=123456

Tracking parameters: ?utm_source=…

Effective Loop Prevention:

User-agent: *

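# Match each parameter whether it comes first (?) or later (&)

Disallow: /*?sessionid=

Disallow: /*&sessionid=

Disallow: /*?utm_

Disallow: /*&utm_

Disallow: /page/*/page/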

13. Ignoring Faceted Navigation

E-commerce and content sites with faceted navigation often create millions of duplicate URLs that waste crawl budget.

Crawl Budget Wasters:

/products?color=red&size=large&brand=nike

/blog?category=seo&author=john&year=2024

Strategic Blocking:

User-agent: *

Disallow: /*?color=

Disallow: /*?size=

Disallow: /*?*&*&
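
Some quick arithmetic shows how fast faceted URLs multiply. The facet names and counts below are hypothetical, and the calculation ignores parameter ordering, which would inflate the total even further.

```python
from math import prod

# Hypothetical number of selectable values per facet on one category page.
facet_values = {"color": 12, "size": 8, "brand": 40, "price": 6, "sort": 4}


def filtered_url_count(facets: dict[str, int]) -> int:
    """Count distinct filter combinations for one category page."""
    # Each facet is either unset or set to one of its values: n + 1 choices.
    # Subtract 1 to exclude the unfiltered page itself.
    return prod(n + 1 for n in facets.values()) - 1


per_category = filtered_url_count(facet_values)
total = per_category * 50  # Hypothetical: 50 category pages.
print(per_category, total)
```

Under these assumptions, five modest facets across fifty categories already produce several million crawlable URLs, nearly all of them duplicates of the same products.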

14. Not Blocking Search Result Pages

Internal search result pages rarely provide value to search engines and consume significant crawl budget.

Budget-Wasting URLs:

/search?q=robots.txt

/?s=seo+tips

Efficient Blocking:

User-agent: *

Disallow: /search?

Disallow: /*?s=

Disallow: /*?q=

Security and Privacy Mistakes

15. Using Robots.txt for Security

The instructions in robots.txt files cannot enforce crawler behavior on your site; it’s up to the crawler to obey them.

Relying on robots.txt for security is a critical mistake because:

- The file is publicly accessible.

- Malicious crawlers can ignore it.

- It actually advertises sensitive directories.

Security Mistake:

User-agent: *

Disallow: /secret-admin-panel/

Disallow: /private-documents/

Disallow: /confidential-data/

Proper Security Approach:

- Use server-level access controls.

- Implement password protection.

- Use noindex meta tags to keep pages out of search results (the page must stay crawlable so the tag can be read).

16. Exposing Sensitive Information

Your robots.txt file is publicly visible, making it a poor choice for hiding sensitive areas of your site.

Information Exposure:

User-agent: *

Disallow: /employee-salaries/

Disallow: /internal-reports/

Disallow: /beta-product-launch/

Better Privacy Approach:

User-agent: *

Disallow: /temp/

Disallow: /staging/

# Use server-level restrictions for truly sensitive content

Mobile and International SEO Issues

17. Not Considering Mobile Crawlers

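With mobile-first indexing, Google crawls mainly with its smartphone crawler, so anything you block for Googlebot is also hidden from mobile indexing. Googlebot Smartphone and Googlebot Desktop obey the same Googlebot token in robots.txt, so you cannot target one without the other, and a separate Googlebot-Mobile group is not read by the smartphone crawler.

Mobile Crawler Awareness:

User-agent: Googlebot

# Applies to both Googlebot Smartphone and Googlebot Desktop

Disallow: /no-mobile/

Disallow: /desktop-only/

Allow: /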
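
One detail that often surprises people is that a crawler follows only the most specific group that names it and skips the User-agent: * group entirely. The sketch below illustrates this with Python's standard-library parser; the paths and the ExampleBot name are placeholders.

```python
from urllib.robotparser import RobotFileParser

robots_txt = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /no-mobile/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Googlebot has its own group, so the "*" rules no longer apply to it.
googlebot_private = parser.can_fetch("Googlebot", "https://example.com/private/report")
other_private = parser.can_fetch("ExampleBot", "https://example.com/private/report")
googlebot_no_mobile = parser.can_fetch("Googlebot", "https://example.com/no-mobile/page")
print(googlebot_private, other_private, googlebot_no_mobile)
```

If you add a Googlebot group, repeat inside it every rule from the general group that should still apply.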

18. Blocking Hreflang Pages

If your robots.txt file is blocking URLs that have Hreflang tags for alternate language pages, Google will not crawl those pages to see the Hreflang URLs.

This breaks international SEO implementation.

International SEO Mistake:

User-agent: *

Disallow: /es/

Disallow: /fr/

Disallow: /de/

International SEO Best Practice:

User-agent: *

Allow: /es/

Allow: /fr/

Allow: /de/

Disallow: /es/private/

Disallow: /fr/private/

Disallow: /de/private/

Testing and Monitoring Problems

19. Not Testing Robots.txt Changes

Many robots.txt issues stem from implementing changes without proper testing.

Google Search Console provides a robots.txt report, along with the URL Inspection tool, that many webmasters ignore.

Testing Checklist:

- Use the robots.txt report in Google Search Console.

- Test with different user agents.

- Verify crawl behavior in Search Console.

- Monitor crawl stats after changes.
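
Part of this checklist can be automated with a small pre-deployment test. The sketch below is one possible approach, assuming a hand-written list of URLs that must stay crawlable or blocked; the URLs and the helper name failed_expectations are illustrative. It relies on Python's standard-library parser, which handles plain prefix rules but not wildcards, so it complements rather than replaces the checks in Search Console.

```python
from urllib.robotparser import RobotFileParser


def failed_expectations(robots_txt, expectations, agent="Googlebot"):
    """Return the URLs whose crawlability differs from what was expected."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    failures = []
    for url, should_be_allowed in expectations:
        if parser.can_fetch(agent, url) != should_be_allowed:
            failures.append(url)
    return failures


candidate = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search
"""

# (URL, should it be crawlable?) pairs for a hypothetical site.
expectations = [
    ("https://example.com/", True),
    ("https://example.com/blog/robots-guide/", True),
    ("https://example.com/wp-admin/options.php", False),
    ("https://example.com/search?q=seo", False),
]

print(failed_expectations(candidate, expectations))
print(failed_expectations("User-agent: *\nDisallow: /", expectations))
```

An empty list means the candidate file behaves as intended for every listed URL; the second call shows how a stray Disallow: / would be caught before it reaches production.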

20. Ignoring Search Console Warnings

Check your robots.txt for errors in Google Search Console – the robots.txt check is in Settings.

Google provides specific warnings about robots.txt issues that many site owners overlook.

Key Warnings to Monitor:

- “Submitted URL blocked by robots.txt”.

- “Blocked by robots.txt” coverage issues.

- Crawl anomalies in crawl stats.

21. Not Monitoring Crawl Impact

Failing to monitor how robots.txt changes affect crawl behavior can mask serious issues until it’s too late.

Essential Monitoring Metrics:

- Pages crawled per day.

- Crawl budget utilization.

- Index coverage changes.

- Organic traffic fluctuations.
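
If you have access to server logs, the first metric can be approximated by counting requests whose user agent mentions Googlebot. This is a rough sketch that assumes access-log lines in the common "combined" format; the sample lines are invented, and because user-agent strings can be spoofed, the counts are an estimate rather than verified Googlebot traffic.

```python
import re
from collections import Counter

# Matches the date part of a timestamp such as [10/Oct/2024:13:55:36 +0000].
DATE_PATTERN = re.compile(r"\[(\d{2}/[A-Za-z]{3}/\d{4}):")


def googlebot_hits_per_day(log_lines):
    """Count log lines per day whose text mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        if "Googlebot" not in line:
            continue
        match = DATE_PATTERN.search(line)
        if match:
            hits[match.group(1)] += 1
    return hits


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2024:14:02:10 +0000] "GET /shop/ HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2024:14:05:00 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0"',
    f'66.249.66.1 - - [11/Oct/2024:09:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
]

hits = googlebot_hits_per_day(sample_log)
print(dict(hits))
```

A sudden drop in the daily count after a robots.txt change is a signal to recheck the file right away.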

These are the critical robots.txt issues you should avoid.

WordPress Robots.txt Files: FAQs

Here are some frequently asked questions about robots.txt, especially for WordPress CMS.

1. Why Is Robots.txt Important for WordPress?

Controls Bot Access: It tells bots which directories or files to avoid, such as admin areas or plugin directories, keeping crawlers away from areas that do not belong in search results.

Influences SEO: Properly configured, it helps search engines index your site more efficiently. Misconfigurations can harm your rankings.

Reduces Server Load: By limiting unnecessary bot traffic, you can reduce server load and bandwidth usage.

2. Where Should the WordPress Robots.txt File Be Located?

The robots.txt file must be placed in the root directory of your website (e.g., https://example.com/robots.txt). If it’s missing from this location, bots will assume there are no restrictions and crawl freely.

3. How Do I Create or Edit a Robots.txt File in WordPress?

There are several methods to create and edit the robots.txt file in WordPress:

- Manual Creation: Create a text file named robots.txt in your website’s root directory and edit it directly.

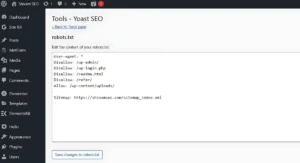

- Using WordPress Plugins: Popular SEO plugins like Yoast SEO and All in One SEO (AIOSEO) allow you to create and edit your robots.txt file from your WordPress dashboard.

- Yoast SEO: Go to SEO → Tools → File Editor.

- AIOSEO: Go to All in One SEO → Tools → Robots.txt Editor. Toggle “Enable Custom Robots.txt” to edit.

4. What Should a Good WordPress Robots.txt File Look Like?

A typical, well-optimized WordPress robots.txt file might look like this:

User-agent: *

Allow: /wp-content/uploads/

Disallow: /wp-admin/

Disallow: /wp-includes/

Disallow: /readme.html

Sitemap: https://example.com/sitemap_index.xml

This example allows bots to crawl uploaded files, blocks access to admin and core directories, and points to your sitemap.

5. Can Bots Ignore WordPress Robots.txt?

Yes. While reputable search engines like Google and Bing generally follow robots.txt rules, malicious bots or certain SEO automation tools may ignore them. Robots.txt is not a security tool, so sensitive pages should be protected with authentication or other security measures.

6. How Long Does It Take for Changes to Take Effect?

Search engines like Google may take up to 24 hours to process changes to your WordPress robots.txt file.

Conclusion

Robots.txt issues can silently destroy years of SEO progress, but they’re entirely preventable.

Start by auditing your current robots.txt file using the robots.txt report in Google Search Console.

Then check for the common mistakes outlined in this guide, and implement the solutions that apply to your site.

Remember these key principles:

- Test every change before implementation.

- Monitor crawl behavior continuously.

- Keep your robots.txt file simple and clear.

- Never rely on robots.txt for security.

- Audit regularly to prevent major disasters.

Your robots.txt file should work as an invisible traffic director, efficiently guiding search engines to your best content while protecting areas that don’t belong in search results.

When implemented correctly, it becomes a powerful ally in your SEO strategy.

Need help with a complex robots.txt situation? Contact us and share your specific challenge with our SEO expert.